Using Quickwit as a web search engine for your website

Happy New Year 2026 🎉. Let's hope this year is full of professional and personal success for everyone.

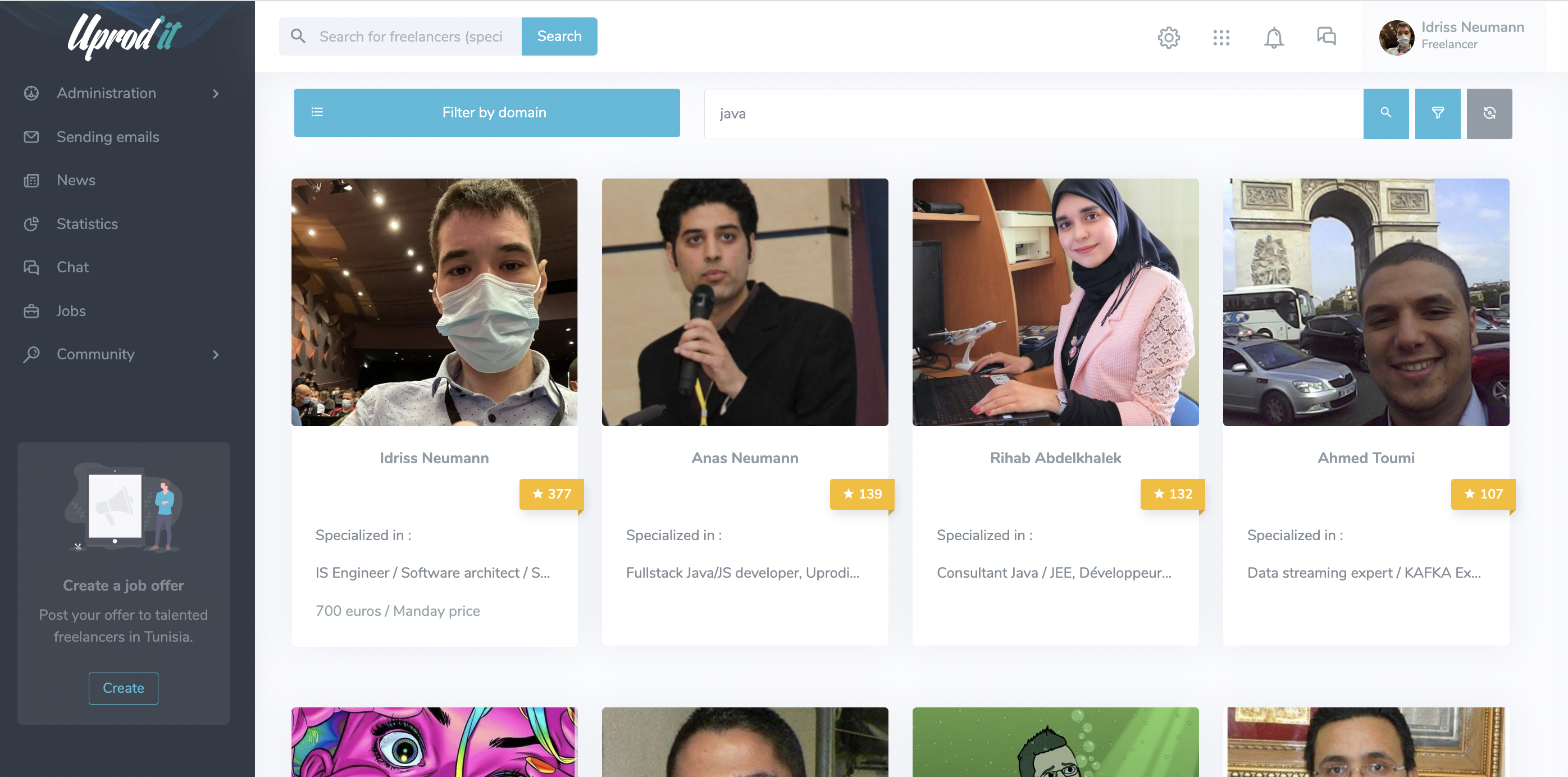

Twelve years ago, a long time before the creation of CWCloud, we launched uprodit.com, a social network dedicated to Tunisian freelancers.

The platform was developed in Java, using the Spring framework, Tomcat, and, later, Vert.x. One of our main ambitions was to build an intelligent search engine capable of leveraging the information users freely entered in their profiles. At the time, achieving this level of flexibility and relevance was extremely challenging with traditional relational database management systems (RDBMS).

That's why we went with Elasticsearch. It was my second experience with it in production (back before the product shifted its focus to observability), after a few years with Apache Solr, and it worked like a charm.

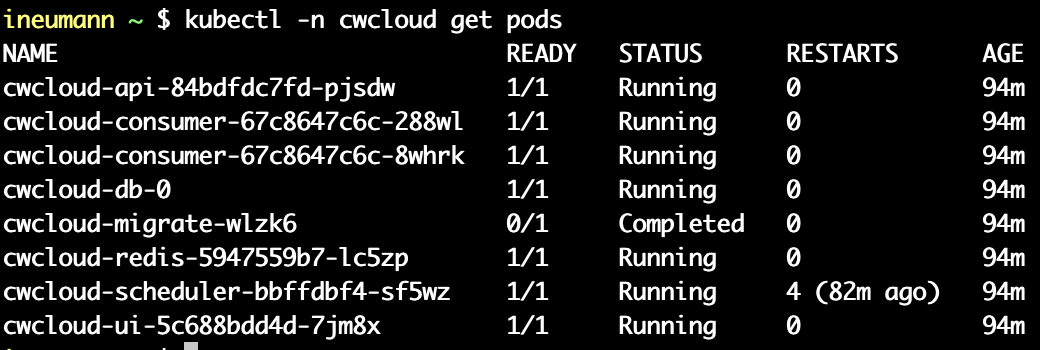

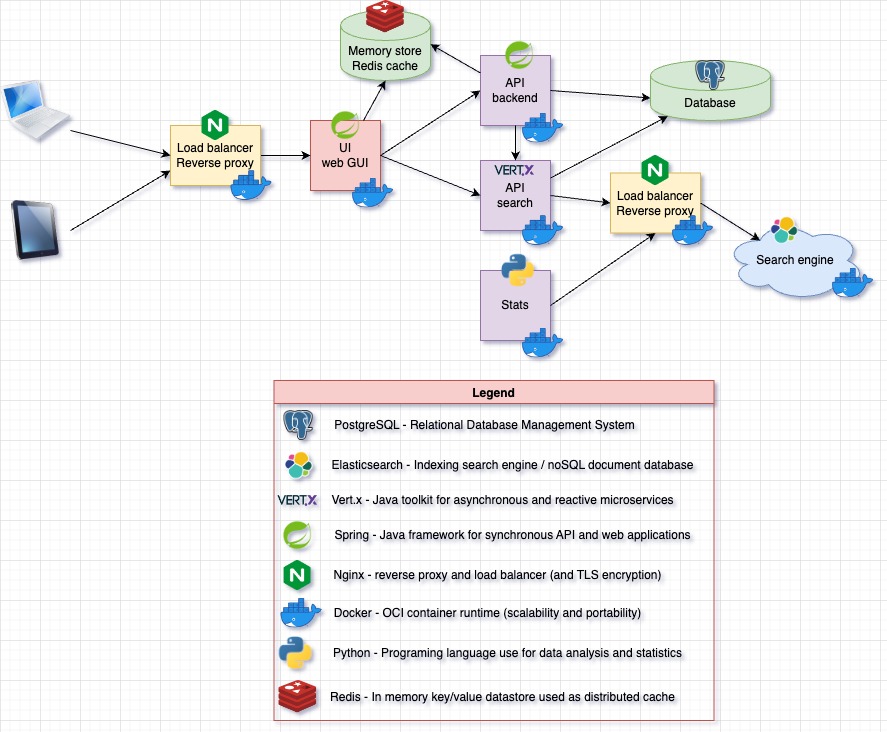

After the launch of CWCloud, uprodit migrated from physical servers to Scaleway instances using CWCloud. At the same time, we started modernizing the backend by adopting container-based architectures. However, the cost of maintaining an Elasticsearch cluster gradually became too high for the organization. Here is what the technical architecture of the project looked like at that time:

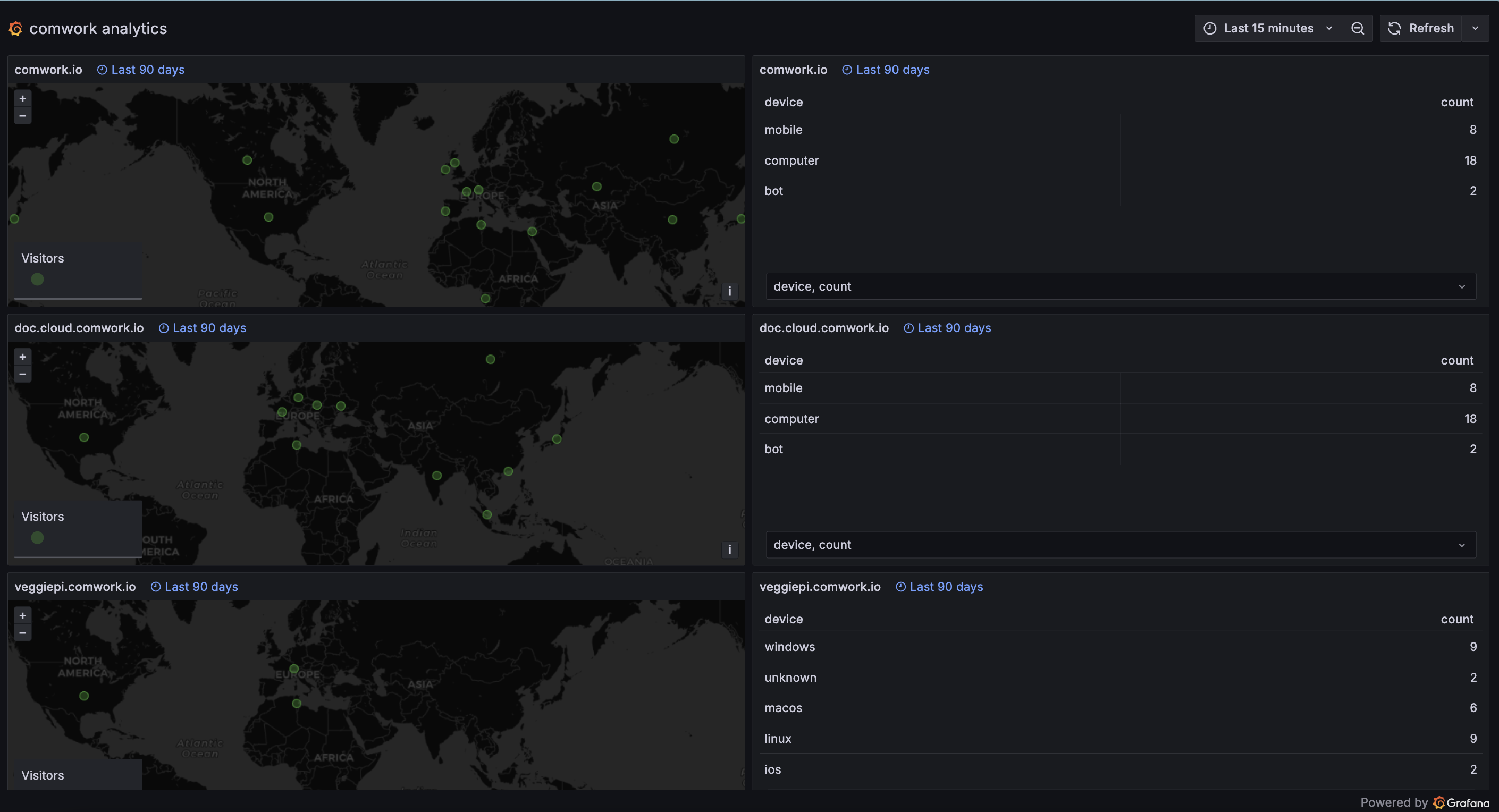

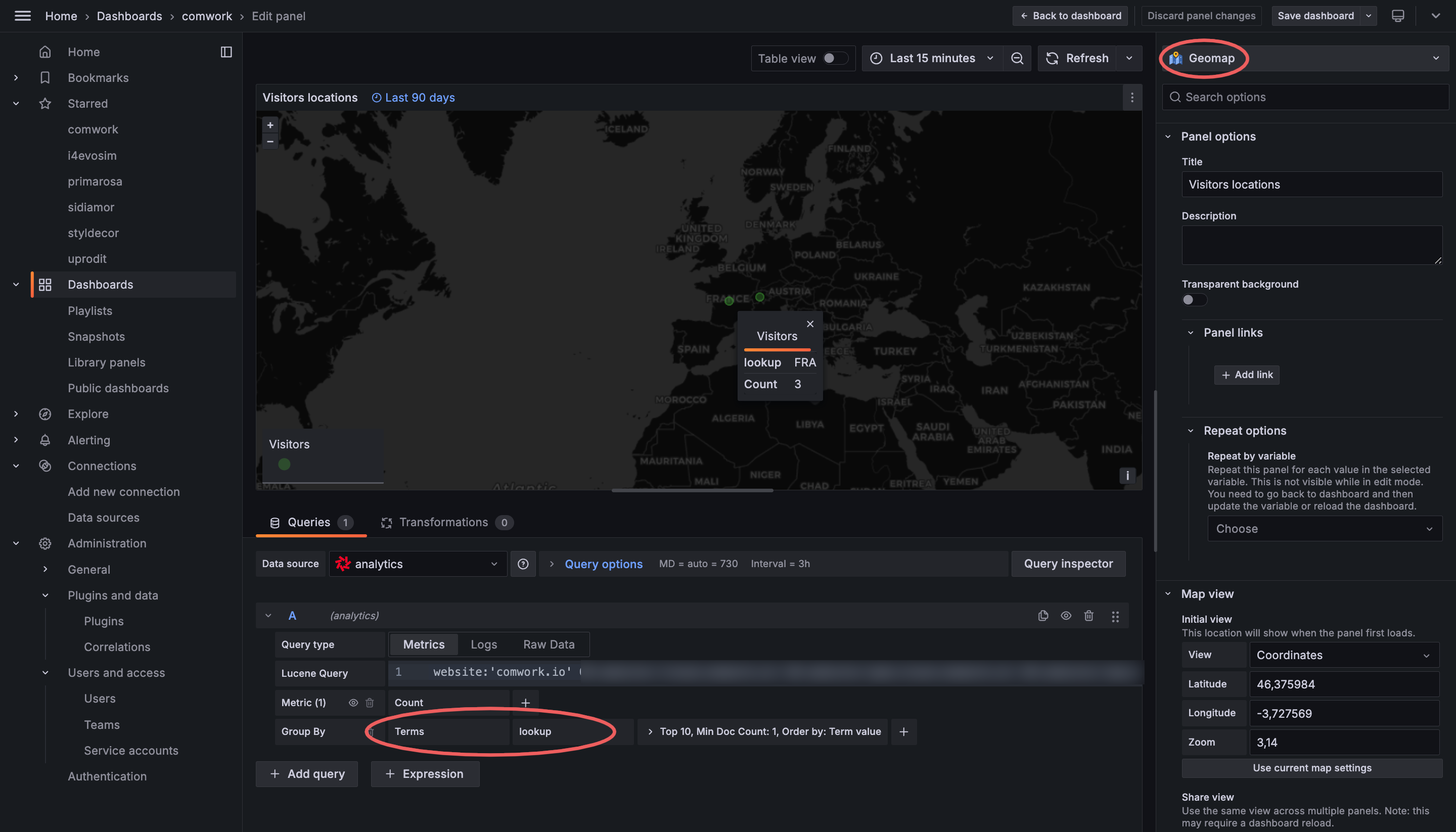

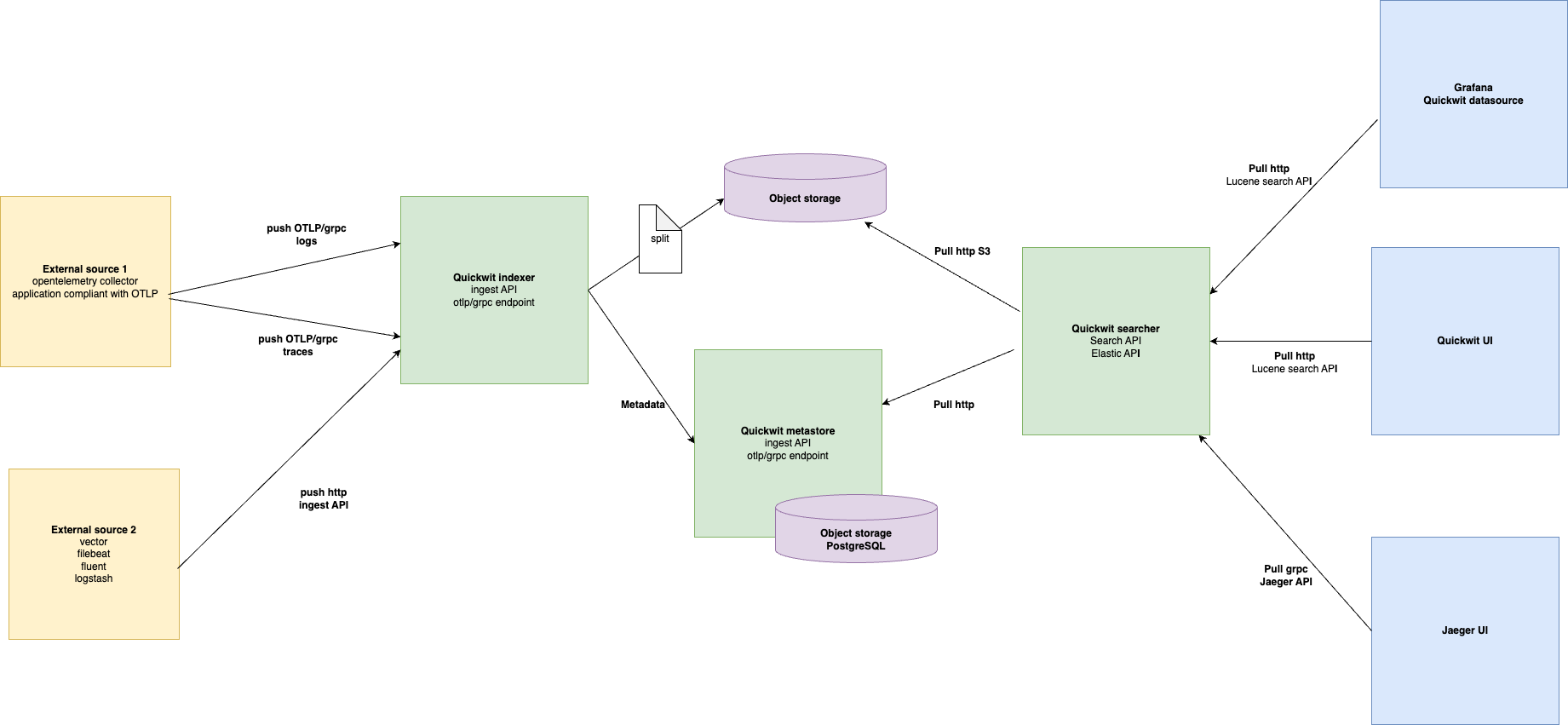

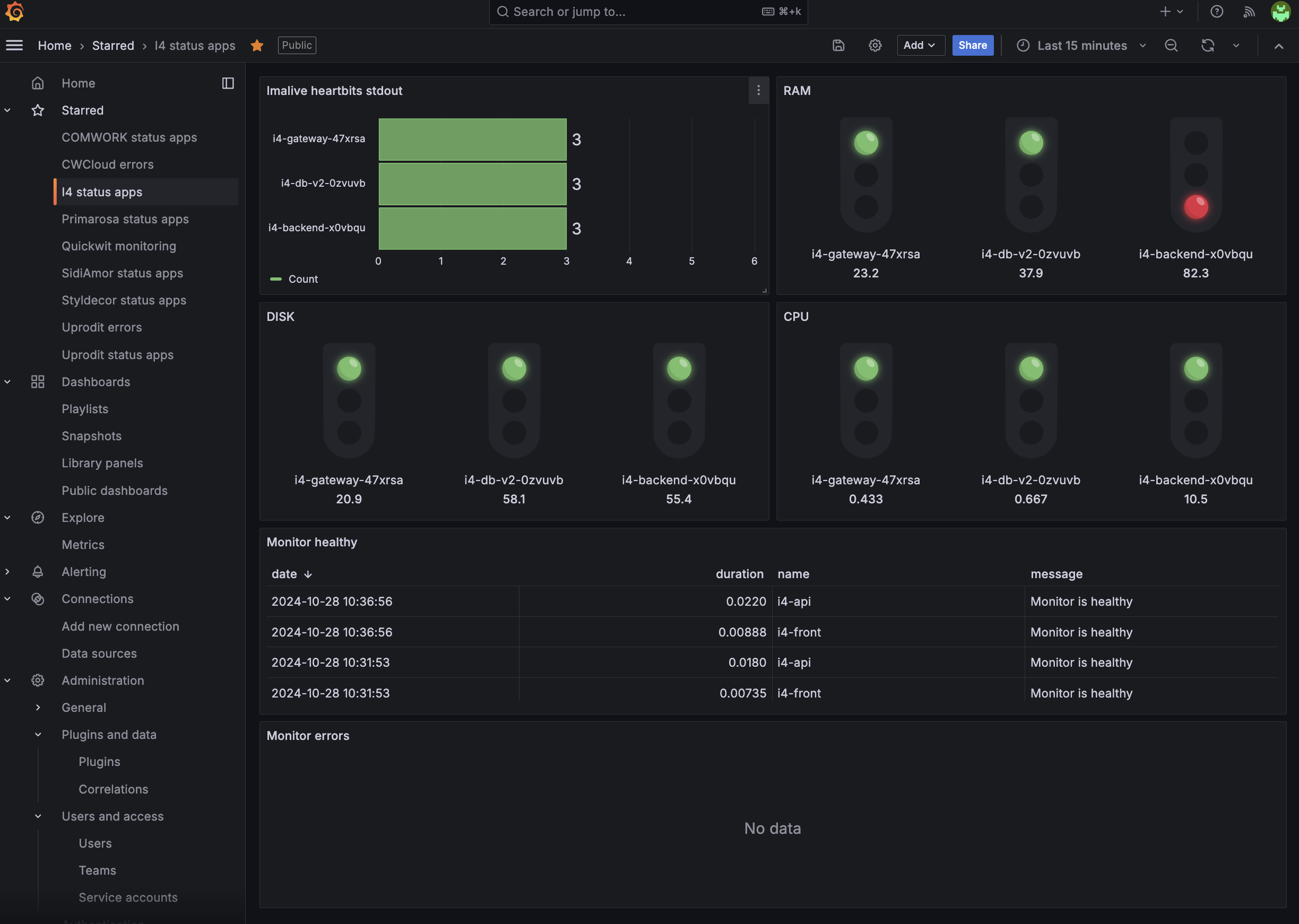

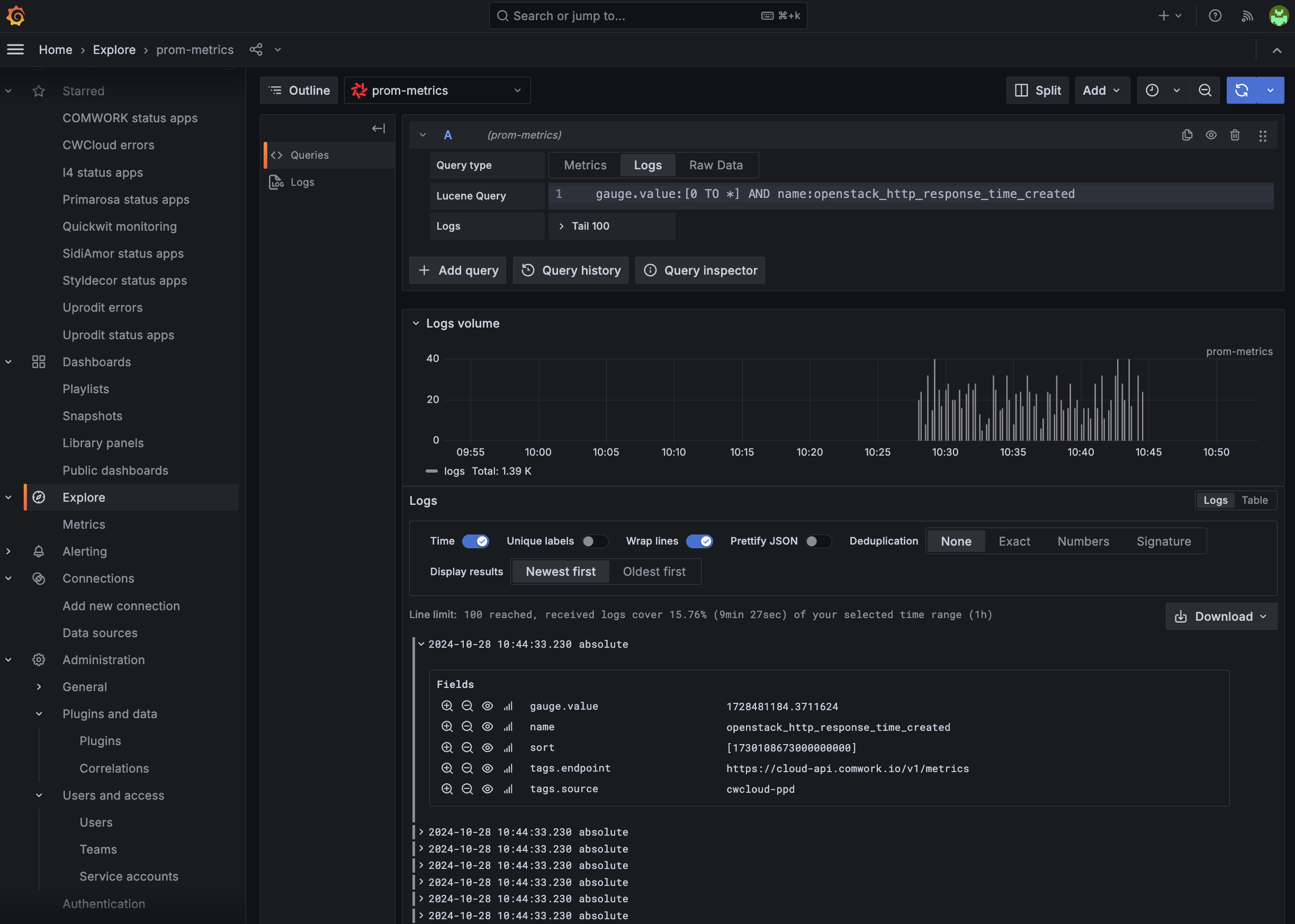

One year ago, we started working with Quickwit [2] and replaced our entire observability and monitoring stack with it. This included logs, traces, Prometheus metrics, and even web analytics.

Although Quickwit wasn't originally designed to serve as a web search engine, our experience with metrics and analytics showed that it could effectively meet our needs while significantly reducing infrastructure costs (thanks to its lower RAM consumption and the fact that indexed data is stored on object storage).

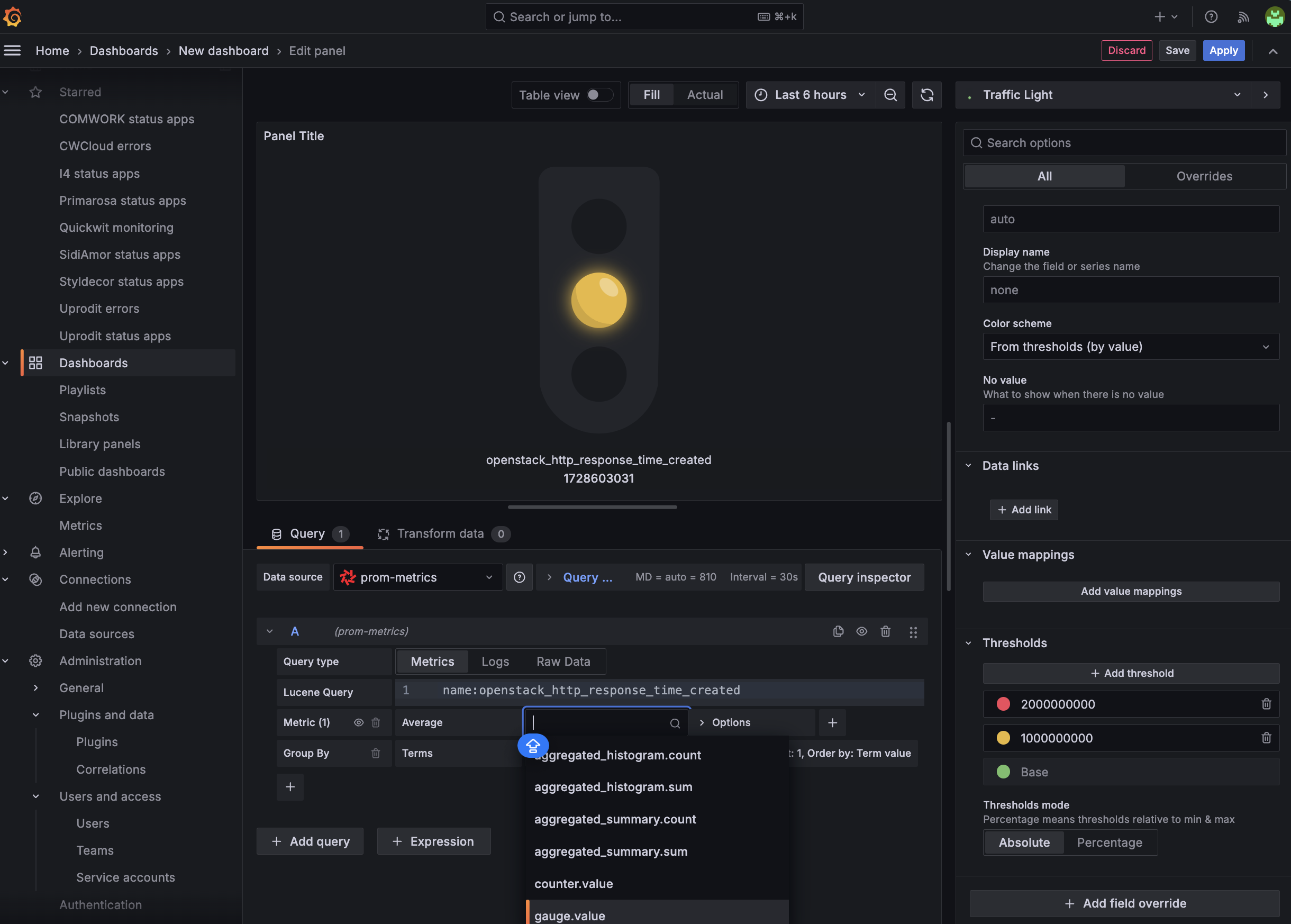

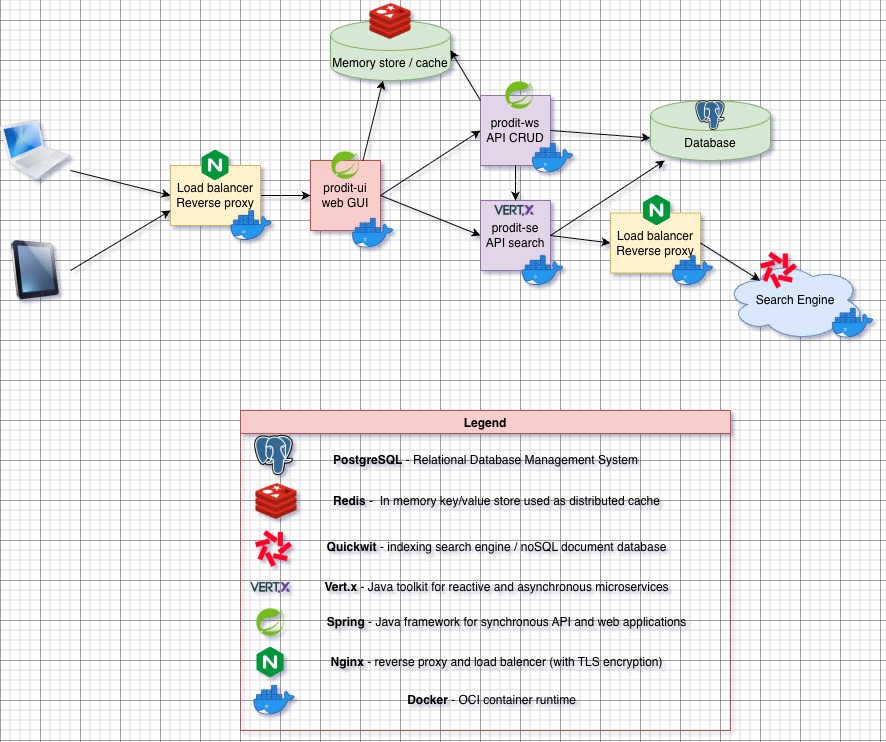

As we mentioned in a previous blog post, Quickwit provides an `_elastic` endpoint that is interoperable with the Elasticsearch and OpenSearch APIs. The idea, therefore, was to replace Elasticsearch while keeping the existing Vert.x Java code with minimal changes.
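
To make the idea concrete, here is a minimal sketch of what targeting the Elasticsearch-compatible endpoint looks like with the plain Java HTTP client. The path `/api/v1/_elastic/<index>/_search` is the one used later in this post; the host and port (`localhost:7280`), the index name `profiles` and the `name` field are assumptions made for illustration. The request is only built, not sent, so the sketch runs without a Quickwit instance.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

public class ElasticEndpointSketch {

    // Builds (but does not send) a search request against Quickwit's
    // Elasticsearch-compatible endpoint. The body is a regular Elasticsearch DSL query.
    static HttpRequest buildSearchRequest(String baseUrl, String index, String dslJson) {
        String path = String.format("/api/v1/_elastic/%s/_search", index);
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + path))
                .timeout(Duration.ofSeconds(5))
                .header("Content-Type", "application/json")
                .header("Accept", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(dslJson))
                .build();
    }

    public static void main(String[] args) {
        // Hypothetical host, index name and field, for illustration only.
        String dsl = "{\"query\":{\"match\":{\"name\":\"java\"}},\"size\":10}";
        HttpRequest request = buildSearchRequest("http://localhost:7280", "profiles", dsl);
        System.out.println(request.method() + " " + request.uri());
    }
}
```

Since the body is a standard Elasticsearch DSL document, anything able to produce that JSON (including the Elasticsearch client's query builders) can keep being used on the application side.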

In other words, we wanted to keep the Elasticsearch client library so that queries could still be built dynamically with the Elasticsearch DSL (domain-specific language). In this blog post, we'll detail the few key points that made the migration succeed.

Keeping the Elasticsearch client library

As mentioned before, we wanted to rewrite as little code as possible, so we chose to keep the Elasticsearch client library and benefit from its DSL builder. Here's the Maven dependency (in the `pom.xml` file):

    <dependency>
        <groupId>co.elastic.clients</groupId>
        <artifactId>elasticsearch-java</artifactId>
        <version>9.2.4</version>
    </dependency>

Creating the Quickwit client

We created an `IQuickwitService` interface because we may add other implementations later, based on a different HTTP client:

    package tn.prodit.network.se.utils.singleton.quickwit;

    import io.vertx.core.json.JsonObject;

    import java.io.Serializable;

    public interface IQuickwitService {
        <T extends Serializable> void index(String index, String id, T document);

        <T extends Serializable> void delete(String index, String id);

        String search(String index, JsonObject query);
    }

Then comes the default implementation, which uses the Vert.x asynchronous HTTP client for the write methods (`index` and `delete`) and the default Java HTTP client for the `search` method:

    import io.vertx.core.Vertx;
    import io.vertx.core.http.HttpClient;
    import io.vertx.core.http.HttpClientOptions;
    import io.vertx.core.http.HttpClientResponse;
    import io.vertx.core.http.HttpMethod;
    import io.vertx.core.json.JsonObject;
    import tn.prodit.network.se.utils.singleton.IPropertyReader;
    import tn.prodit.network.se.utils.singleton.quickwit.IQuickwitService;
    import tn.prodit.network.se.utils.singleton.quickwit.QuickwitTimeout;

    import jakarta.inject.Inject;
    import jakarta.inject.Singleton;

    // Logger and LogManager are assumed to come from Log4j 2
    import org.apache.logging.log4j.LogManager;
    import org.apache.logging.log4j.Logger;

    import java.io.IOException;
    import java.io.Serializable;
    import java.net.URI;
    import java.net.http.HttpRequest;
    import java.net.http.HttpResponse;
    import java.nio.charset.StandardCharsets;
    import java.time.Duration;
    import java.util.Arrays;
    import java.util.Base64;

    import static org.apache.commons.lang3.StringUtils.isBlank;
    // APP_CONFIG_FILE and the QW_KEY_* constants are static imports from the project's constants class (not shown here)

    @Singleton
    public class QuickwitService implements IQuickwitService {
        private static final Logger LOGGER = LogManager.getLogger(QuickwitService.class);

        @Inject
        private IPropertyReader reader;

        @Inject
        private Vertx vertx;

        // Asynchronous Vert.x client, used for the write operations
        private HttpClient client;

        // Synchronous JDK client, used for the search operation
        private java.net.http.HttpClient syncClient;

        private String basicAuth;

        private QuickwitTimeout timeout;

        private String uri;

        // Lazily loads the timeouts from the configuration
        private QuickwitTimeout getTimeout() {
            if (null == this.timeout) {
                this.timeout = QuickwitTimeout.load(reader);
            }
            return this.timeout;
        }

        // Lazily builds the base URI (scheme://host:port) from the configuration
        public String getUri() {
            if (null == this.uri) {
                String host = reader.getQuietly(APP_CONFIG_FILE, QW_KEY_HOST);
                Integer port = reader.getIntQuietly(APP_CONFIG_FILE, QW_KEY_PORT);
                String scheme = reader.getBoolQuietly(APP_CONFIG_FILE, QW_KEY_TLS) ? "https" : "http";
                this.uri = String.format("%s://%s:%s", scheme, host, port);
            }
            return this.uri;
        }

        // Lazily creates the synchronous JDK HTTP client
        private java.net.http.HttpClient getSyncClient() {
            if (null == this.syncClient) {
                QuickwitTimeout timeout = getTimeout();
                this.syncClient = java.net.http.HttpClient.newBuilder()
                    .connectTimeout(Duration.ofMillis(timeout.getConnectTimeout()))
                    .followRedirects(java.net.http.HttpClient.Redirect.NORMAL).build();
            }
            return this.syncClient;
        }

        // Lazily creates the asynchronous Vert.x HTTP client
        private HttpClient getClient() {
            if (null == this.client) {
                String host = reader.getQuietly(APP_CONFIG_FILE, QW_KEY_HOST);
                Integer port = reader.getIntQuietly(APP_CONFIG_FILE, QW_KEY_PORT);
                Boolean tls = reader.getBoolQuietly(APP_CONFIG_FILE, QW_KEY_TLS);
                QuickwitTimeout timeout = getTimeout();
                this.client = vertx.createHttpClient(new HttpClientOptions().setDefaultHost(host)
                    .setDefaultPort(port).setSsl(tls).setConnectTimeout(timeout.getConnectTimeout())
                    .setReadIdleTimeout(timeout.getReadTimeoutInSec()));
            }
            return this.client;
        }

        // Lazily builds the value of the HTTP Basic Authorization header
        private String getBasicAuth() {
            if (isBlank(this.basicAuth)) {
                String user = reader.getQuietly(APP_CONFIG_FILE, QW_KEY_USER);
                String password = reader.getQuietly(APP_CONFIG_FILE, QW_KEY_PASSWORD);
                this.basicAuth = String.format("Basic %s", Base64.getEncoder().encodeToString(String.format("%s:%s", user, password).getBytes(StandardCharsets.UTF_8)));
            }
            return this.basicAuth;
        }

        @Override
        public <T extends Serializable> void index(String index, String id, T document) {
            String url = String.format("/api/v1/%s/ingest", index);
            getClient().request(HttpMethod.POST, url, ar -> {
                if (ar.failed()) {
                    LOGGER.error("[quickwit][index] Request creation failure: " + ar.cause().getMessage(),
                        ar.cause());
                    return;
                }

                JsonObject payload = JsonObject.mapFrom(document);
                ar.result().putHeader("Content-Type", "application/json")
                    .putHeader("Accept", "application/json").putHeader("Authorization", getBasicAuth())
                    .send(payload.toBuffer(), resp -> {
                        if (resp.failed()) {
                            // Report the failure of the send step (resp), not of the request creation (ar), which succeeded
                            LOGGER.error("[quickwit][index] Request sending failure: " + resp.cause().getMessage(), resp.cause());
                            return;
                        }

                        HttpClientResponse response = resp.result();
                        response.bodyHandler(body -> {
                            String logMessage = String.format("[quickwit][index] response url = %s, code = %s, body = %s, payload = %s", url, response.statusCode(), body.toString(), payload);
                            if (response.statusCode() < 200 || response.statusCode() >= 400) {
                                LOGGER.error(logMessage);
                            } else {
                                LOGGER.debug(logMessage);
                            }
                        });
                    });
            });
        }

        @Override
        public <T extends Serializable> void delete(String index, String id) {
            String url = String.format("/api/v1/%s/delete-tasks", index);
            getClient().request(HttpMethod.POST, url, ar -> {
                if (ar.failed()) {
                    LOGGER.error("[quickwit][delete] Request creation failure: " + ar.cause().getMessage(), ar.cause());
                    return;
                }

                // A delete task removes every document matching the query
                JsonObject payload = new JsonObject();
                payload.put("query", "id:" + id);
                payload.put("search_fields", Arrays.asList("id"));

                ar.result().putHeader("Content-Type", "application/json")
                    .putHeader("Accept", "application/json").putHeader("Authorization", getBasicAuth())
                    .send(payload.toBuffer(), resp -> {
                        if (resp.failed()) {
                            // Report the failure of the send step (resp), not of the request creation (ar), which succeeded
                            LOGGER.error("[quickwit][delete] Request sending failure: " + resp.cause().getMessage(), resp.cause());
                            return;
                        }

                        HttpClientResponse response = resp.result();
                        response.bodyHandler(body -> {
                            String logMessage = String.format("[quickwit][delete] response url = %s, code = %s, body = %s, payload = %s", url, response.statusCode(), body.toString(), payload);
                            if (response.statusCode() < 200 || response.statusCode() >= 400) {
                                LOGGER.error(logMessage);
                            } else {
                                LOGGER.debug(logMessage);
                            }
                        });
                    });
            });
        }

        @Override
        public String search(String index, JsonObject query) {
            // The query is an Elasticsearch DSL document sent to the Elasticsearch-compatible endpoint
            String path = String.format("/api/v1/_elastic/%s/_search", index);
            HttpRequest request =
                HttpRequest.newBuilder().timeout(Duration.ofMillis(getTimeout().getReadTimeout()))
                    .uri(URI.create(String.format("%s%s", getUri(), path)))
                    .header("Content-Type", "application/json").header("Accept", "application/json")
                    .header("Authorization", getBasicAuth())
                    .POST(HttpRequest.BodyPublishers.ofString(query.toString())).build();

            try {
                HttpResponse<String> response =
                    getSyncClient().send(request, HttpResponse.BodyHandlers.ofString());
                return response.body();
            } catch (IOException | InterruptedException e) {
                LOGGER.error(String.format("[quickwit][search] Unexpected exception e.type = %s, e.msg = %s",
                    e.getClass().getSimpleName(),
                    e.getMessage()));
                return null;
            }
        }
    }

Adding an abstraction layer

To make the transition smooth, we created two interfaces, each with an Elasticsearch implementation that we then replaced with a Quickwit implementation through dependency injection [4].
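
The pattern can be sketched without any framework. In the simplified example below, the `Indexer` interface, the `select` factory and `ProfileService` are hypothetical stand-ins (the real project relies on a dependency injection container and named bindings), and the implementations only return a description of the HTTP call they would issue instead of performing it.

```java
public class IndexerSwitchSketch {

    // Simplified, hypothetical stand-in for the indexing interface.
    interface Indexer {
        String index(String indexName, String id, String json);
    }

    // Describes the call an Elasticsearch-backed implementation would issue.
    static class ElasticsearchIndexer implements Indexer {
        @Override
        public String index(String indexName, String id, String json) {
            return "PUT /" + indexName + "/_doc/" + id;
        }
    }

    // Describes the call a Quickwit-backed implementation would issue.
    static class QuickwitIndexer implements Indexer {
        @Override
        public String index(String indexName, String id, String json) {
            return "POST /api/v1/" + indexName + "/ingest";
        }
    }

    // Hypothetical factory playing the role of the container's named binding.
    static Indexer select(String backend) {
        return "quickwit".equals(backend) ? new QuickwitIndexer() : new ElasticsearchIndexer();
    }

    // Calling code depends on the interface only, so it is untouched by the switch.
    static class ProfileService {
        private final Indexer indexer;

        ProfileService(Indexer indexer) {
            this.indexer = indexer;
        }

        String save(String id, String json) {
            return indexer.index("profiles", id, json);
        }
    }

    public static void main(String[] args) {
        String doc = "{\"name\":\"Jane\"}";
        System.out.println(new ProfileService(select("elasticsearch")).save("42", doc));
        System.out.println(new ProfileService(select("quickwit")).save("42", doc));
    }
}
```

The point of the design is that switching the backend becomes a wiring decision: the service code that indexes profiles never changes.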

Indexing interface

For the writing part, here's the interface, which has two implementations (one for Elasticsearch and one for Quickwit):

    import tn.prodit.network.se.indexation.adapter.IndexedDocAdapater;

    import java.io.IOException;
    import java.io.Serializable;

    public interface IIndexer {
        <T extends Serializable> void index(IndexedDocAdapater<T> adapter, String id, T document) throws IOException;

        <T extends Serializable> void delete(IndexedDocAdapater<T> adapter, String id) throws IOException;
    }

And here's the Quickwit implementation:

    package tn.prodit.network.se.utils.singleton.indexer.impl;

    import tn.prodit.network.se.indexation.adapter.IndexedDocAdapater;
    import tn.prodit.network.se.pojo.IQuickwitSerializable;
    import tn.prodit.network.se.utils.singleton.indexer.IIndexer;
    import tn.prodit.network.se.utils.singleton.quickwit.IQuickwitService;

    import jakarta.inject.Inject;
    import jakarta.inject.Named;
    import jakarta.inject.Singleton;

    import java.io.IOException;
    import java.io.Serializable;

    @Singleton
    @Named("quickwitIndexer")
    public class QuickwitIndexer implements IIndexer {
        @Inject
        private IQuickwitService quickwit;

        @Override
        public <T extends Serializable> void index(IndexedDocAdapater<T> adapter, String id, T document)
            throws IOException {
            // Documents that need a Quickwit-specific shape are converted before being sent
            quickwit.index(adapter.getIndexFullName(),
                id,
                document instanceof IQuickwitSerializable ? ((IQuickwitSerializable<?>) document).to()
                    : document);
        }

        @Override
        public <T extends Serializable> void delete(IndexedDocAdapater<T> adapter, String id) throws IOException {
            quickwit.delete(adapter.getIndexFullName(), id);
        }
    }

You may notice that we introduced the `IQuickwitSerializable` interface for value objects (VO), because some types are not fully supported by Vert.x and therefore cannot be serialized directly. For example, lists of nested objects are not properly handled.

Let’s imagine we have a List<SkillVO> skills, which is a nested list of objects. To address this limitation, we had to duplicate the data in the index mapping using two array<text> fields: one containing only the searchable subfields (such as name), and another preserving the full object structure so it can be unmarshalled correctly and remain interoperable.

    {
        "indexed": true,
        "fast": true,
        "name": "searchableSkills",
        "type": "array<text>",
        "tokenizer": "raw"
    },
    {
        "indexed": false,
        "name": "skills",
        "type": "array<text>"
    }

Then, on the original VO class, we had to implement the to() method of the IQuickwitSerializable interface like this:

    import tn.prodit.network.se.pojo.IQuickwitSerializable;
    import tn.prodit.network.se.utils.JSONUtils;

    import java.util.List;
    import java.util.stream.Collectors;

    class PersonalProfileVO implements IQuickwitSerializable<QuickwitPersonalProfile> {
        private List<SkillVO> skills;

        public List<SkillVO> getSkills() {
            return this.skills;
        }

        public void setSkills(List<SkillVO> skills) {
            this.skills = skills;
        }

        @Override
        public QuickwitPersonalProfile to() {
            QuickwitPersonalProfile dest = new QuickwitPersonalProfile();
            if (null != this.getSkills()) {
                // each skill is stored as a JSON string to preserve the full object structure
                dest.setSkills(this.getSkills().stream().map(s -> JSONUtils.objectTojsonQuietly(s, SkillVO.class)).collect(Collectors.toList()));
                // we will perform the search query only on the name
                dest.setSearchableSkills(this.getSkills().stream().map(s -> s.getName()).collect(Collectors.toList()));
            }
            return dest;
        }
    }

Then we duplicated the VO class and implemented `to()` the other way around:

    import tn.prodit.network.se.pojo.IQuickwitSerializable;
    import tn.prodit.network.se.utils.JSONUtils;

    import java.util.List;
    import java.util.stream.Collectors;

    class QuickwitPersonalProfile implements IQuickwitSerializable<PersonalProfileVO> {
        // each entry is a skill serialized as a JSON string
        private List<String> skills;

        // skill names only, used by the search queries
        private List<String> searchableSkills;

        public List<String> getSkills() {
            return this.skills;
        }

        public void setSkills(List<String> skills) {
            this.skills = skills;
        }

        public List<String> getSearchableSkills() {
            return this.searchableSkills;
        }

        public void setSearchableSkills(List<String> searchableSkills) {
            this.searchableSkills = searchableSkills;
        }

        @Override
        public PersonalProfileVO to() {
            PersonalProfileVO dest = new PersonalProfileVO();
            if (null != this.getSkills()) {
                // rebuild the nested objects from their JSON representation
                dest.setSkills(this.getSkills().stream().map(s -> JSONUtils.json2objectQuietly(s, SkillVO.class)).collect(Collectors.toList()));
            }
            return dest;
        }
    }

Reading interface

For the reading part, here's the interface, which also has two implementations (one for Elasticsearch and one for Quickwit):

    import co.elastic.clients.elasticsearch._types.SortOrder;
    import co.elastic.clients.elasticsearch._types.query_dsl.BoolQuery;
    import co.elastic.clients.elasticsearch.core.SearchRequest;
    import co.elastic.clients.json.jackson.JacksonJsonpGenerator;
    import co.elastic.clients.json.jackson.JacksonJsonpMapper;
    import com.fasterxml.jackson.core.JsonFactory;
    import jakarta.json.stream.JsonGenerator;
    import tn.prodit.network.se.pojo.SearchResult;
    import tn.prodit.network.se.pojo.fields.IFields;
    import tn.prodit.network.se.utils.singleton.searcher.Sort;

    import java.io.IOException;
    import java.io.Serializable;
    import java.io.StringWriter;
    import java.util.List;
    import java.util.Optional;

    public interface ISearcher {
        JacksonJsonpMapper mapper = new JacksonJsonpMapper();

        <T extends Serializable> SearchResult<T> search(String index,
                                                        BoolQuery query,
                                                        Optional<Sort> sort,
                                                        Integer startIndex,
                                                        Integer maxResults,
                                                        Class<T> clazz);

        <T extends IFields> SearchResult<String> search(String index,
                                                        List<String> fields,
                                                        List<String> criteria,
                                                        Integer startIndex,
                                                        Integer maxResults,
                                                        Class<T> clazz);

        // Serializes a SearchRequest built with the Elasticsearch client into a JSON string
        static String toJson(SearchRequest request, JacksonJsonpMapper mapper) {
            JsonFactory factory = new JsonFactory();
            StringWriter jsonObjectWriter = new StringWriter();
            try {
                JsonGenerator generator = new JacksonJsonpGenerator(factory.createGenerator(jsonObjectWriter));
                request.serialize(generator, mapper);
                generator.close();
                return jsonObjectWriter.toString();
            } catch (IOException e) {
                return null;
            }
        }

        default JacksonJsonpMapper getMapper() {
            return mapper;
        }

        // Takes a SearchRequest so that it matches the static helper above
        default String toJson(SearchRequest request) {
            return toJson(request, getMapper());
        }
    }

Notes:

- you can override the default method `getMapper()` with an already instantiated mapper

- the `toJson()` method allows the Quickwit implementation to transform the `SearchRequest` object into a JSON string that will be sent to the `_elastic` endpoint

Then, the Quickwit implementation was pretty straightforward:

    import co.elastic.clients.elasticsearch._types.query_dsl.BoolQuery;
    import co.elastic.clients.elasticsearch.core.SearchRequest;
    import io.vertx.core.json.JsonArray;
    import io.vertx.core.json.JsonObject;
    import tn.prodit.network.se.pojo.IQuickwitSerializable;
    import tn.prodit.network.se.pojo.SearchResult;
    import tn.prodit.network.se.pojo.fields.IFields;
    import tn.prodit.network.se.utils.singleton.quickwit.IQuickwitService;
    import tn.prodit.network.se.utils.singleton.searcher.ISearcher;
    import tn.prodit.network.se.utils.singleton.searcher.Sort;

    import jakarta.inject.Inject;
    import jakarta.inject.Named;
    import jakarta.inject.Singleton;

    // Logger and LogManager are assumed to come from Log4j 2
    import org.apache.logging.log4j.LogManager;
    import org.apache.logging.log4j.Logger;

    import java.io.Serializable;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.Optional;

    import static org.apache.commons.lang3.StringUtils.isBlank;
    import static tn.prodit.network.se.utils.CommonUtils.initSearchResult;
    import static tn.prodit.network.se.utils.JSONUtils.json2objectQuietly;

    // Note: the getSearchRequest(...) helper and the second search(...) overload are not shown in this excerpt
    @Singleton
    @Named("quickwitSearcher")
    public class QuickwitSearcher implements ISearcher {
        private static final Logger LOGGER = LogManager.getLogger(QuickwitSearcher.class);

        @Inject
        private IQuickwitService quickwit;

        private JsonObject performSearch(String index,
                                         BoolQuery query,
                                         Optional<Sort> sort,
                                         Integer startIndex,
                                         Integer maxResults) {
            SearchRequest r = getSearchRequest(index, sort, startIndex, maxResults, query);
            JsonObject jsonQuery = new JsonObject(toJson(r));
            String quickwitResponse = quickwit.search(index, jsonQuery);
            if (isBlank(quickwitResponse)) {
                // fall back to an empty result set when the search failed
                quickwitResponse = "{\"hits\": {\"hits\": []}}";
            }
            return new JsonObject(quickwitResponse);
        }

        @Override
        public <T extends Serializable> SearchResult<T> search(String index,
                                                               BoolQuery query,
                                                               Optional<Sort> sort,
                                                               Integer startIndex,
                                                               Integer maxResults,
                                                               Class<T> clazz) {
            SearchResult<T> result = initSearchResult(Long.valueOf(startIndex), Long.valueOf(maxResults));
            List<T> elems = new ArrayList<>();

            JsonObject quickwitResponse = performSearch(index, query, sort, startIndex, maxResults);
            JsonObject hits = quickwitResponse.getJsonObject("hits");
            if (null == hits) {
                return result;
            }

            JsonArray hits2 = hits.getJsonArray("hits");
            for (int i = 0; i < hits2.size(); i++) {
                if (IQuickwitSerializable.class.isAssignableFrom(clazz)) {
                    JsonObject hit = hits2.getJsonObject(i);
                    if (null == hit) {
                        continue;
                    }

                    JsonObject source = hit.getJsonObject("_source");
                    if (null == source || isBlank(source.toString())) {
                        continue;
                    }

                    // unmarshal into the Quickwit-specific class, then convert back to the original VO
                    IQuickwitSerializable<?> obj = (IQuickwitSerializable<?>) json2objectQuietly(source.toString(), IQuickwitSerializable.getSerializationClazz(clazz));
                    elems.add((T) obj.to());
                }
            }

            result.setResults(elems);
            result.setTotalResults(Long.valueOf(elems.size()));
            return result;
        }
    }

A few difficulties to address

Here are some of the challenges we encountered:

- Quickwit doesn't replace documents by ID when they are updated, so queries must explicitly filter and select the most recent document version.

- Document deletion is not as immediate as in Elasticsearch, meaning deleted profiles may still appear in search results for a short period of time.

- Quickwit doesn't support `regex` queries, which required us to rewrite those queries using `term` or `match` queries instead.
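
To illustrate the first point, here is one possible way to keep only the most recent version of each document, applied on the client side once the hits have been received. This is a sketch under assumptions, not necessarily how uprodit handles it: the `Hit` record and its `id`, `updatedAt` and `name` fields are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class LatestVersionSketch {

    // Hypothetical hit shape: a document id plus a last-update timestamp (epoch millis).
    record Hit(String id, long updatedAt, String name) {}

    // Keeps one hit per id: the one with the highest updatedAt.
    // The output order follows the first appearance of each id in the input.
    static List<Hit> latestPerId(List<Hit> hits) {
        Map<String, Hit> latest = new LinkedHashMap<>();
        for (Hit hit : hits) {
            latest.merge(hit.id(), hit, (a, b) -> b.updatedAt() > a.updatedAt() ? b : a);
        }
        return new ArrayList<>(latest.values());
    }

    public static void main(String[] args) {
        List<Hit> hits = List.of(
                new Hit("42", 1000L, "Old name"),
                new Hit("7", 1500L, "Other profile"),
                new Hit("42", 2000L, "New name"));
        // Two hits remain: id 42 in its newest version, then id 7.
        for (Hit hit : latestPerId(hits)) {
            System.out.println(hit.id() + " -> " + hit.name());
        }
    }
}
```

Deduplicating after the fact interferes with pagination, because a page may end up with fewer results than requested; that is one reason to push as much of the filtering as possible into the query itself.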

Conclusion

Given the difficulties mentioned above, it's understandable that many teams may argue that Quickwit isn't the best solution for this use case. However, in the context of uprodit, search result relevance doesn't need to be perfect, and the significant infrastructure cost savings we achieved made this migration worthwhile.

Footnotes

- [2] Before it was acquired by Datadog.

- [4] The `prodit-se` module was already written in Vert.x using Google Guice and JSR 330 (`javax.inject` package).