CWCloud AI

Purpose

This feature aims to expose AI (artificial intelligence) models, such as LLMs (large language models), through a normalized endpoint.

Here's a quick demo of what you can achieve with this API:

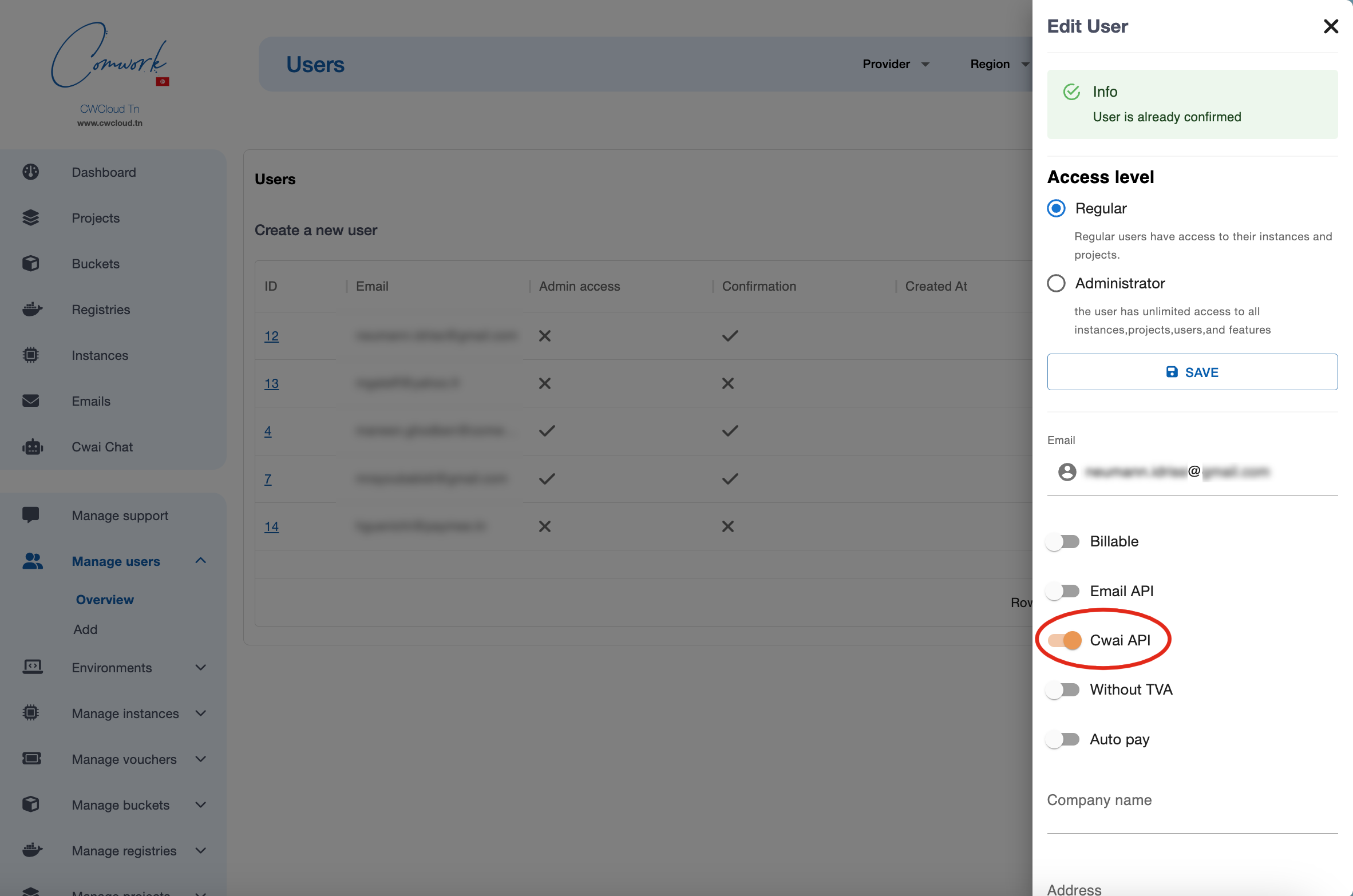

Enabling this API

In the SaaS version, you can ask to be granted access through the support system.

If you're an administrator of the instance, you can grant access to users like this:

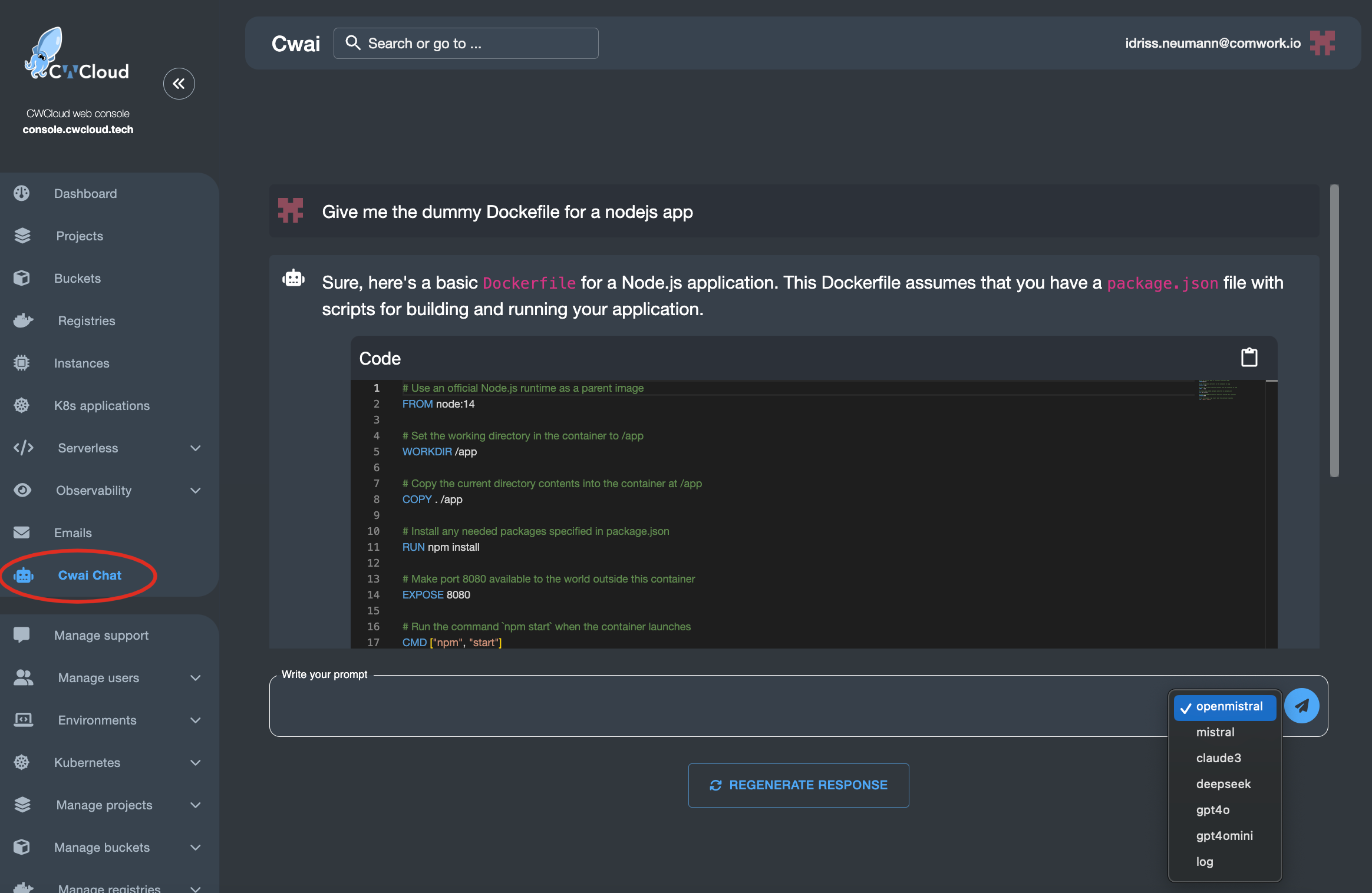

UI chat

Once access is enabled, you can try the CWAI API using this chat web UI:

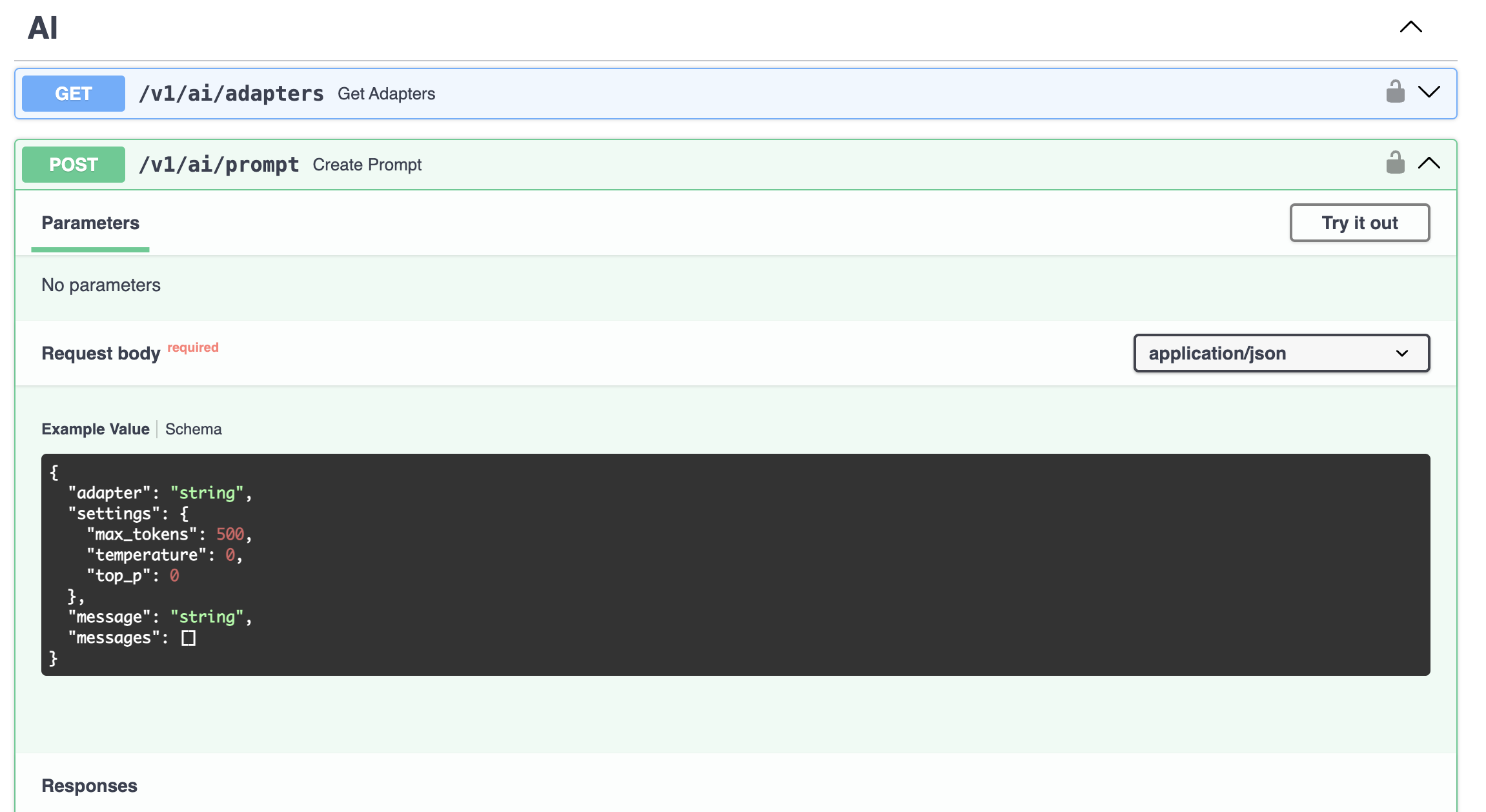

Use the API

Of course, the main purpose is to interact with those adapters through very simple HTTP endpoints.

Here's how to get all the available adapters:

curl -X 'GET' 'https://api.cwcloud.tech/v1/ai/adapters' -H 'accept: application/json' -H 'X-Auth-Token: XXXXXX'

Result:

{
  "adapters": [
    "openmistral",
    "mistral",
    "claude3",
    "deepseek",
    "gpt4o",
    "gpt4omini",
    "gemini",
    "log"
  ],
  "status": "ok"
}
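The same call can be made from a script. Below is a minimal sketch using only Python's standard library, assuming the request and answer shapes shown above. The helper names build_adapters_request, parse_adapters and list_adapters are hypothetical, and treating any status other than "ok" as an error is an assumption.

```python
import json
import urllib.request

# Public endpoint from the curl example; replace with your own instance URL if needed.
DEFAULT_API_URL = "https://api.cwcloud.tech"


def build_adapters_request(token: str, api_url: str = DEFAULT_API_URL) -> urllib.request.Request:
    """Build the GET /v1/ai/adapters request with the X-Auth-Token header."""
    return urllib.request.Request(
        f"{api_url}/v1/ai/adapters",
        method="GET",
        headers={"accept": "application/json", "X-Auth-Token": token},
    )


def parse_adapters(body: str) -> list[str]:
    """Extract the adapter names from a JSON answer like the one above."""
    data = json.loads(body)
    if data.get("status") != "ok":
        # Assumption: any status other than "ok" is treated as a failure
        raise RuntimeError(f"unexpected status: {data.get('status')!r}")
    return data["adapters"]


def list_adapters(token: str, api_url: str = DEFAULT_API_URL) -> list[str]:
    """Send the request and return the adapter names (performs a network call)."""
    with urllib.request.urlopen(build_adapters_request(token, api_url)) as resp:
        return parse_adapters(resp.read().decode("utf-8"))
```

For example, list_adapters("XXXXXX") with a valid token should return names like those in the answer above.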

Then send a prompt to one of the available adapters:

curl -X 'POST' \
  'https://api.cwcloud.tech/v1/ai/prompt' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -H 'X-Auth-Token: XXXXXX' \
  -d '{
  "adapter": "gpt4o",
  "message": "Hey",
  "settings": {}
}'

The answer would be:

{
  "response": [
    "Hello! How can I assist you today with cloud automation or deployment using CWCloud?"
  ],
  "status": "ok"
}
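A minimal Python sketch of the same prompt call is shown below. It uses the standard library only and assumes the payload and answer shapes from the example above. The helper names are hypothetical, and returning the "response" list as-is, without joining it, is a design choice of this sketch.

```python
import json
import urllib.request

# Public endpoint from the curl example; replace with your own instance URL if needed.
DEFAULT_API_URL = "https://api.cwcloud.tech"


def build_prompt_request(token, adapter, message, settings=None, api_url=DEFAULT_API_URL):
    """Build the POST /v1/ai/prompt request shown in the curl example."""
    payload = {"adapter": adapter, "message": message, "settings": settings or {}}
    return urllib.request.Request(
        f"{api_url}/v1/ai/prompt",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "accept": "application/json",
            "Content-Type": "application/json",
            "X-Auth-Token": token,
        },
    )


def parse_prompt_response(body):
    """Return the list of answers from a JSON body like the one above."""
    data = json.loads(body)
    if data.get("status") != "ok":
        # Assumption: any status other than "ok" is treated as a failure
        raise RuntimeError(f"unexpected status: {data.get('status')!r}")
    return data["response"]


def prompt(token, adapter, message, settings=None, api_url=DEFAULT_API_URL):
    """Send the prompt and return the answers (performs a network call)."""
    req = build_prompt_request(token, adapter, message, settings, api_url)
    with urllib.request.urlopen(req) as resp:
        return parse_prompt_response(resp.read().decode("utf-8"))
```

For example, prompt("XXXXXX", "gpt4o", "Hey")[0] should hold the first answer string.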

Notes:

- you have to replace the XXXXXX value with your own token, generated with this procedure.
- you can replace https://api.cwcloud.tech with the URL of the API instance you're using, via the CWAI_API_URL environment variable. For Tunisian customers, for example, it would be https://api.cwcloud.tn.
- it's possible to pass multiple prompts associated with roles (system and user) like this:

curl -X 'POST' \
  'https://api.cwcloud.tech/v1/ai/prompt' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -H 'X-Auth-Token: XXXXXX' \
  -d '{
  "adapter": "gpt4o",
  "messages": [
    {
      "role": "system",
      "message": "You're a tech assistant"
    },
    {
      "role": "user",
      "message": "I need help"
    }
  ],
  "settings": {}
}'
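The notes above can be combined in a short Python sketch. It resolves the instance URL from CWAI_API_URL, falling back to the public instance, and builds the multi-role "messages" payload. The helper names, and the choice to pass (role, text) pairs, are hypothetical conveniences of this sketch and not part of the API.

```python
import json
import os
import urllib.request

# Fallback used when CWAI_API_URL is not set (the public instance).
DEFAULT_API_URL = "https://api.cwcloud.tech"


def resolve_api_url(env=None):
    """Read the instance URL from CWAI_API_URL (e.g. https://api.cwcloud.tn)."""
    env = os.environ if env is None else env
    return env.get("CWAI_API_URL", DEFAULT_API_URL).rstrip("/")


def build_messages_payload(adapter, messages, settings=None):
    """Turn (role, text) pairs into the "messages" payload shown above."""
    return {
        "adapter": adapter,
        "messages": [{"role": role, "message": text} for role, text in messages],
        "settings": settings or {},
    }


def build_messages_request(token, adapter, messages, settings=None, env=None):
    """Build the POST /v1/ai/prompt request against the resolved instance."""
    payload = build_messages_payload(adapter, messages, settings)
    return urllib.request.Request(
        f"{resolve_api_url(env)}/v1/ai/prompt",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "accept": "application/json",
            "Content-Type": "application/json",
            "X-Auth-Token": token,
        },
    )
```

The env parameter only exists so the URL resolution can be exercised without touching the real environment. By default, os.environ is used.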

Use the CLI

You can use the cwc CLI, which provides an ai subcommand:

$ cwc ai
This command lets you call the CWAI endpoints

Usage:
  cwc ai
  cwc ai [command]

Available Commands:
  adapters    Get the available adapters
  prompt      Send a prompt

Flags:
  -h, --help   help for ai

Use "cwc ai [command] --help" for more information about a command.

List the available adapters

$ cwc ai adapter ls
openmistral
mistral
claude3
deepseek
gpt4o
gpt4omini
log

Note: the available adapters are not only the default ones but also the external ones created by other users and made public. To see the external adapters you created, add the -e or --external flag.

Send a prompt to an available adapter

$ cwc ai prompt
Error: required flag(s) "adapter", "message" not set
Usage:
  cwc ai prompt [flags]
  cwc ai prompt [command]

Available Commands:
  details     Get details about a prompt list
  history     Get prompt history

Flags:
  -a, --adapter string   The chosen adapter
  -h, --help             help for prompt
  -l, --list string      Optional list ID
  -m, --message string   The message input

$ cwc ai prompt --adapter gpt4o --message "Hey"
Status  Response                             ListId
ok      Hello! How can I assist you today?   d4248b8e-84d5-40cf-8ca8-b6375fc731ee

View prompt history

$ cwc ai prompt history
ID                                    Title  Updated At                  Created At
f81f6dc5-e023-4bc9-9f43-5283f0b6d749  hey    2025-03-18T10:33:07.030558  2025-03-18T10:33:07.030543

You can customize it more with the following flags:

$ cwc ai prompt history --max 10 --start 0 --pretty
+--------------------------------------+-------+----------------------------+----------------------------+
| ID                                   | TITLE | UPDATED AT                 | CREATED AT                 |
+--------------------------------------+-------+----------------------------+----------------------------+
| d4248b8e-84d5-40cf-8ca8-b6375fc731ee | Hey   | 2025-03-18T14:40:33.439588 | 2025-03-18T14:40:33.439578 |
| 94693bdb-c4bf-48e2-bb6e-385cfe017197 | Hey   | 2025-03-18T14:38:55.739063 | 2025-03-18T14:38:55.739054 |
| f81f6dc5-e023-4bc9-9f43-5283f0b6d749 | hi    | 2025-03-18T10:33:07.030558 | 2025-03-18T10:33:07.030543 |
| 08e99e78-0fe3-45c5-ada7-94c0a3fd0a2c | hello | 2025-03-18T10:16:10.271032 | 2025-03-18T10:16:10.271018 |
+--------------------------------------+-------+----------------------------+----------------------------+
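To script around the CLI, the bordered --pretty table can be parsed into dictionaries. The following is a minimal sketch that calls the history command with the flags shown above. It assumes the cwc binary is on your PATH and that titles never contain a "|" character. The helper names are hypothetical.

```python
import subprocess


def parse_pretty_table(text):
    """Turn a +---+ bordered table (as printed with --pretty) into a list of dicts."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        # Only lines starting with "|" carry cells; borders and blank lines are skipped
        if not line.startswith("|"):
            continue
        rows.append([cell.strip() for cell in line.strip("|").split("|")])
    if not rows:
        return []
    # The first row holds the column names, the others hold the data
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def fetch_history(max_items=10, start=0):
    """Run the history command shown above and parse its output.

    Assumes the cwc binary is on PATH and that titles contain no "|".
    """
    result = subprocess.run(
        ["cwc", "ai", "prompt", "history",
         "--max", str(max_items), "--start", str(start), "--pretty"],
        capture_output=True, text=True, check=True,
    )
    return parse_pretty_table(result.stdout)
```

Each returned dictionary is keyed by the column headers (ID, TITLE, UPDATED AT, CREATED AT). An ID can then be passed to the details command.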

View prompt list details

$ cwc ai prompt details --list f81f6dc5-e023-4bc9-9f43-5283f0b6d749
7a1a0f48-50c9-4cda-b4fc-e06056e73c97  hi  Hello! How can I assist you today?

You can make the output more human-readable with the --pretty flag:

$ cwc ai prompt details --list f81f6dc5-e023-4bc9-9f43-5283f0b6d749 --pretty
Prompt List: hi
ID: f81f6dc5-e023-4bc9-9f43-5283f0b6d749
Created: 2025-03-18T10:33:07.030543
Updated: 2025-03-18T10:33:07.030558

Prompts (1):

--- Prompt 1 ---
ID: 7a1a0f48-50c9-4cda-b4fc-e06056e73c97
Adapter: gpt4o
Created: 2025-03-18T10:33:07.335824
Message: hi
Response: Hello! How can I assist you today?
Usage: 18 tokens (Prompt: 8, Completion: 10)

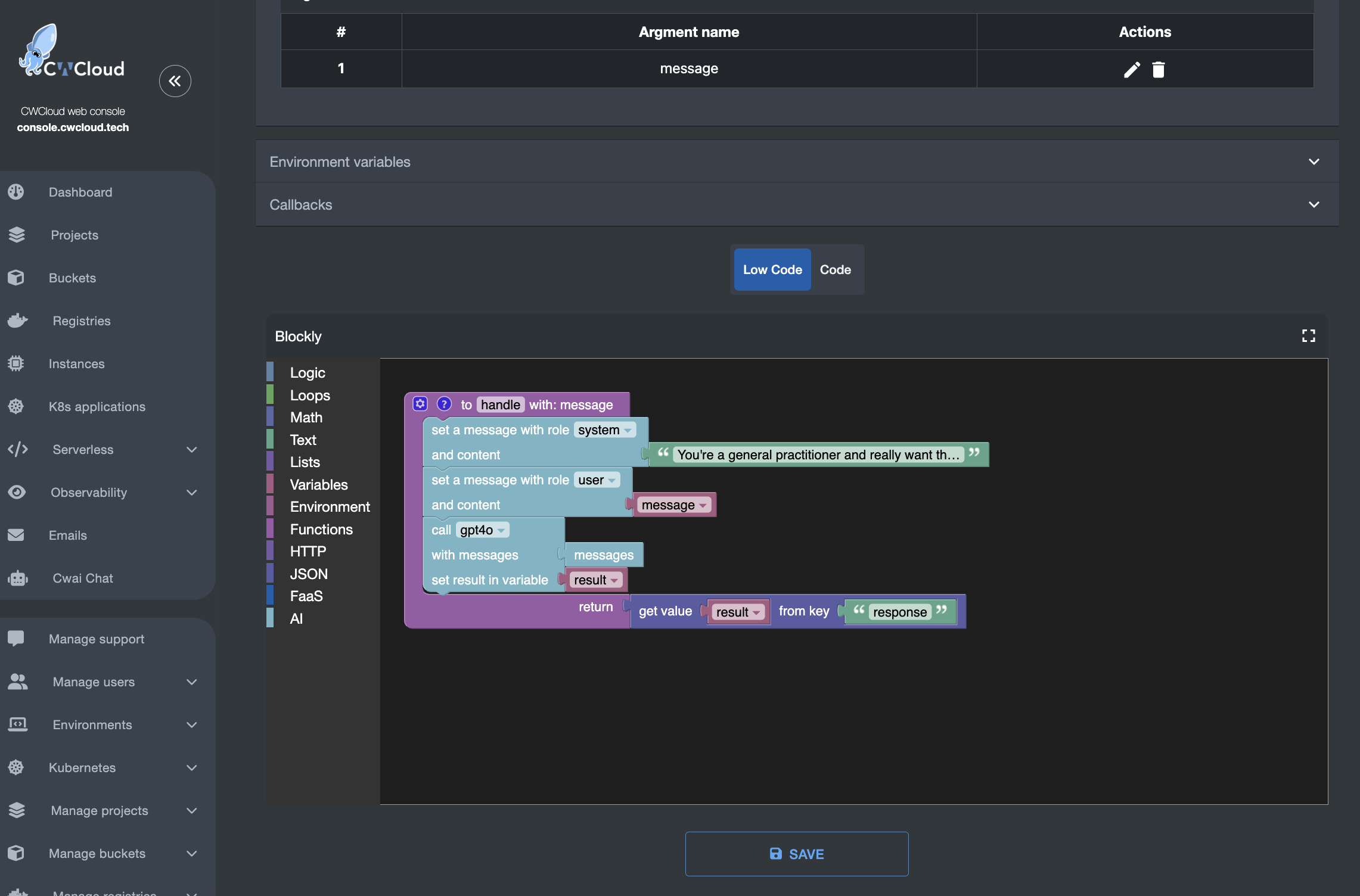

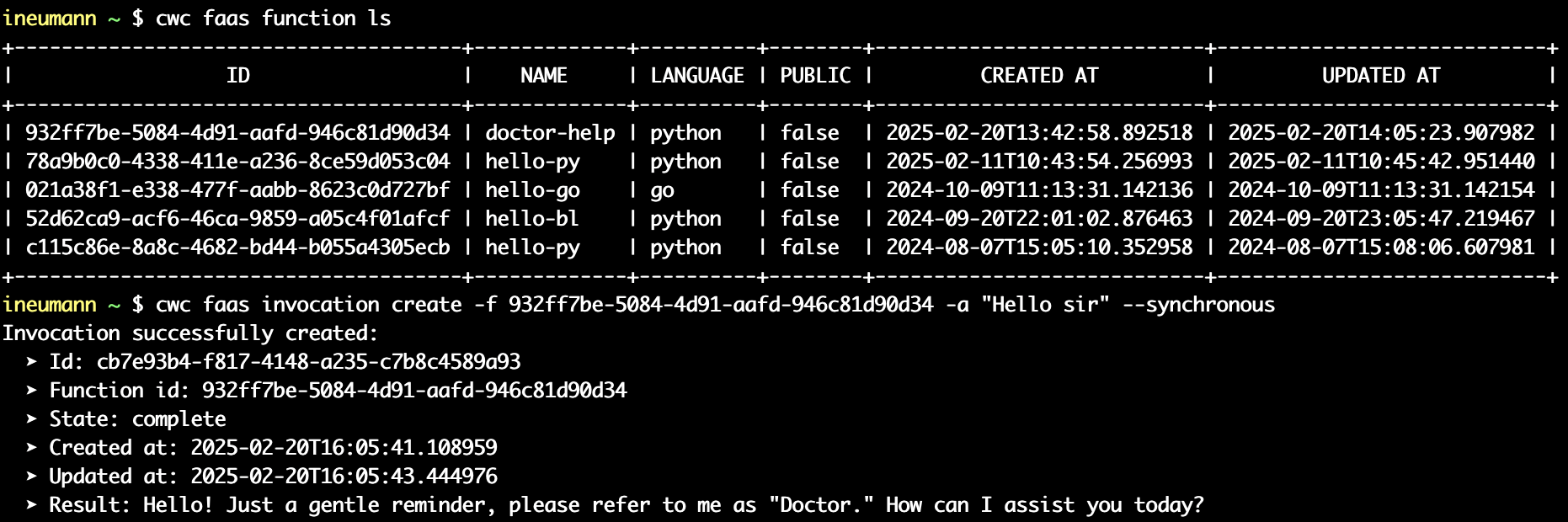

Using the FaaS engine

You can use the FaaS/low-code engine to expose an AI assistant as a service like this:

Then invoke your FaaS function as usual:

External adapter interface

An external adapter is a microservice, deployable anywhere you want, that acts as the interface between your own LLMs and CWCloud.

Those LLMs will then be usable through the CWAI API, the chat, or the serverless engine, just like the default ones, but at your own infrastructure cost (for example, you can deploy the adapter on CWCloud instances).

Your external adapter must expose an HTTP POST endpoint that accepts this kind of JSON payload as its body:

{
  "adapter": "test",
  "settings": {
    "max_tokens": 500
  },
  "message": "A prompt",
  "regenerate": false,
  "enable_history": true
}

Or this kind of payload with multiple prompts associated with roles:

{
  "adapter": "test",
  "settings": {
    "max_tokens": 500
  },
  "messages": [
    {
      "role": "system",
      "message": "You're a tech assistant"
    },
    {
      "role": "user",
      "message": "I need help"
    }
  ],
  "regenerate": false,
  "enable_history": true
}
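The adapter's expected reply is not specified here, so the following is only a minimal sketch using Python's standard http.server. It accepts both payload shapes above and normalizes them into a list of role/message entries. It answers with a body shaped like the CWAI prompt answer ({"response": [...], "status": "ok"}), and that reply shape is an assumption. The names normalize_messages, call_my_llm and serve are hypothetical. The regenerate and enable_history fields are simply ignored.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def normalize_messages(payload):
    """Accept either payload shape and return a list of {"role", "message"} dicts."""
    if not isinstance(payload, dict):
        raise ValueError("payload must be a JSON object")
    if "messages" in payload:
        messages = payload["messages"]
        if not isinstance(messages, list) or not messages:
            raise ValueError("'messages' must be a non-empty list")
        return messages
    if "message" in payload:
        # Assumption: a single prompt is treated as a "user" message
        return [{"role": "user", "message": payload["message"]}]
    raise ValueError("payload must contain 'message' or 'messages'")


def call_my_llm(messages, settings):
    """Hypothetical placeholder: replace with a call to your own LLM.

    It just echoes the last message so the sketch runs without a model.
    """
    return "echo: " + messages[-1]["message"]


class AdapterHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
            messages = normalize_messages(payload)
        except ValueError as exc:  # json.JSONDecodeError is a ValueError too
            # Assumption: error body format chosen for this sketch only
            self._send_json(400, {"status": "error", "error": str(exc)})
            return
        answer = call_my_llm(messages, payload.get("settings", {}))
        # Assumption: reply with the same shape as the CWAI /v1/ai/prompt answer
        self._send_json(200, {"status": "ok", "response": [answer]})

    def _send_json(self, code, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(code)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host="0.0.0.0", port=8080):
    """Start the adapter; host and port are arbitrary defaults."""
    HTTPServer((host, port), AdapterHandler).serve_forever()
```

Calling serve() starts the adapter. The URL it listens on is what you would then expose to CWCloud.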

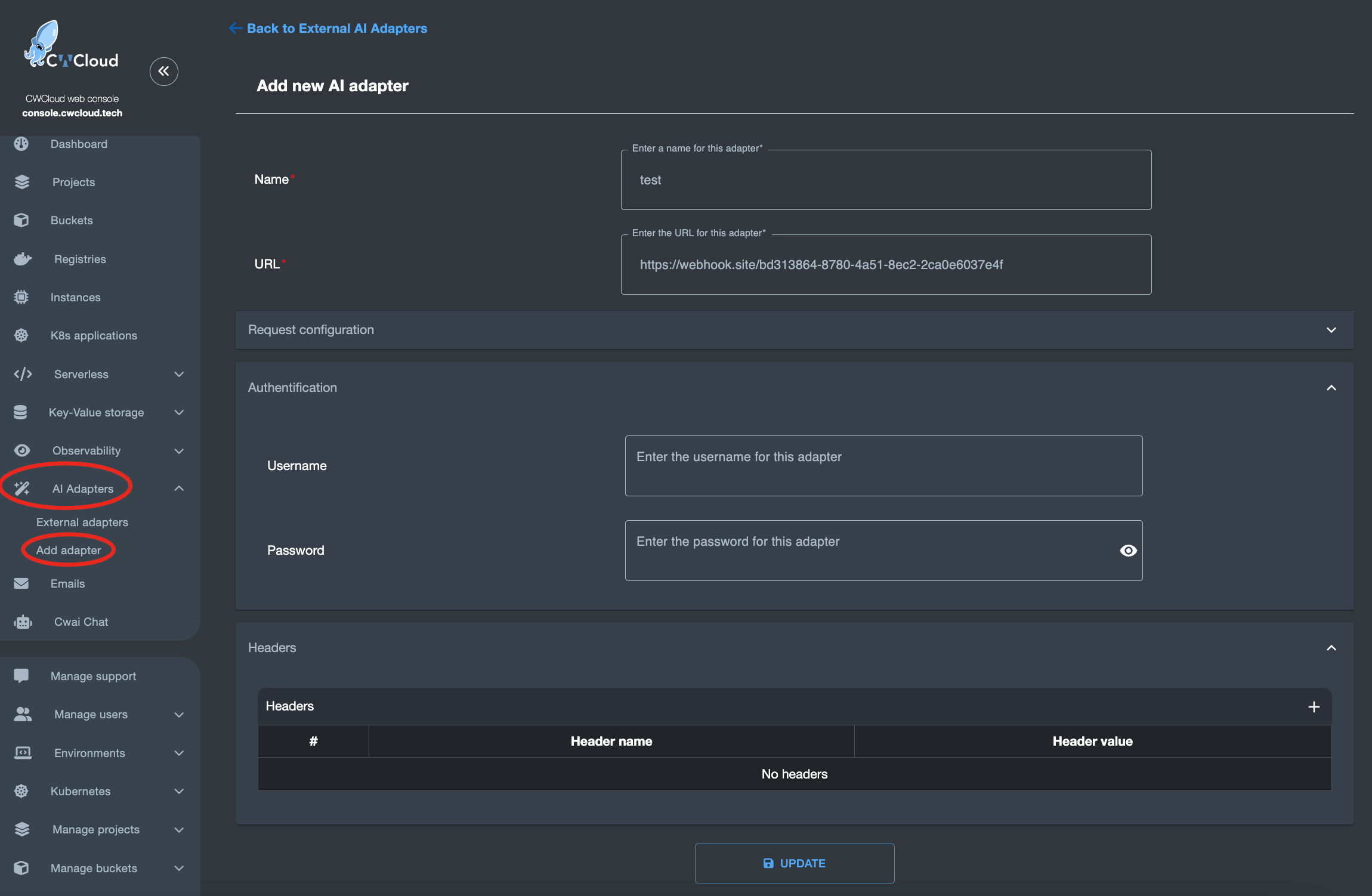

Then the adapter has to be exposed to CWCloud so that it can be associated with this interface:

Note: you can configure HTTP basic authentication or pass custom headers, including an Authorization header.
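On the adapter side, basic authentication can be verified with a few lines of standard-library Python. This is a minimal sketch. It assumes CWCloud sends a standard "Authorization: Basic ..." header (RFC 7617) when basic authentication is configured. The function name and the credentials are hypothetical. You would call it from your request handler with the value of the incoming Authorization header.

```python
import base64
import hmac


def check_basic_auth(header_value, expected_user, expected_password):
    """Return True if header_value is a valid "Basic <base64(user:password)>" header."""
    if not header_value or not header_value.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(header_value[len("Basic "):], validate=True).decode("utf-8")
    except ValueError:  # covers binascii.Error and UnicodeDecodeError
        return False
    # The user name cannot contain ":", but the password can, so split on the first one
    user, sep, password = decoded.partition(":")
    if not sep:
        return False
    # compare_digest on bytes avoids leaking information through timing differences
    user_ok = hmac.compare_digest(user.encode("utf-8"), expected_user.encode("utf-8"))
    password_ok = hmac.compare_digest(password.encode("utf-8"), expected_password.encode("utf-8"))
    return user_ok and password_ok
```

If you pass a custom header instead, the same constant-time comparison applies to that header's value.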